This article formulates the problem of extracting emails from any website using Python, gives an overview of two solutions, and then walks through each solution in detail for beginners.

By the end of the article, you will also see how the two methods compare. Continue reading to find out.

You may want to read the disclaimer on web scraping here:

Recommended Tutorial: Is Web Scraping Legal?

You can find the full code of both web scrapers on our GitHub here.

Problem Formulation

Marketers build email lists to generate leads.

Statistics show that 33% of marketers send weekly emails, and 26% send emails multiple times per month. An email list is a valuable tool for both businesses and job seekers.

For instance, to find out about job openings, you can look up the email address of an employee at the company you want to work for.

However, manually locating, copying, and pasting emails into a CSV file takes time, costs money, and is prone to error. There are a lot of online tutorials for building email extraction bots.

When attempting to extract emails from a website, these bots run into difficulties, including long extraction times and unexpected errors.

So how can you obtain email addresses from a company website efficiently? And how can we use Python to extract them robustly?

Method Summary

This post will provide two ways to extract emails from websites. They are referred to as Direct Email Extraction and Indirect Email Extraction, respectively.

Our Python code will search for emails on the target page of a given company or specific website when using the direct email extraction method.

For instance, when a user enters “www.scrapingbee.com” at the prompt, our Python email extractor bot scrapes the website’s URLs. It then uses a regular expression to look for emails and saves them in a CSV file.

The second method, the indirect email extraction method, leverages Google.com’s Search Engine Result Page (SERP) to extract email addresses instead of using a specific website.

For instance, a user may type “scrapingbee.com” as the website name. The email extractor bot searches Google for this term, extracts email addresses from the search results using regex, and stores them in a CSV file.

In the next section, you will learn more about these methods in more detail.

These two techniques are excellent email list-building tools.

As mentioned above, the main issue with other email extraction techniques posted online is that they crawl hundreds of irrelevant URLs that don’t contain emails, so a single run can take several hours.

Read on to discover our two methods.

Solution

Method 1 Direct Email Extraction

This method will outline the step-by-step process for obtaining an email address from a particular website.

Step 1: Install Libraries.

Using the pip command, install the third-party Python libraries listed below. The re, collections, and urllib modules ship with Python’s standard library and do not need to be installed.

- You can use regular expressions (re) to match an email address’s format.
- You can use the requests module to send HTTP requests.
- bs4 is Beautiful Soup, used for web page extraction.
- lxml is the parser that Beautiful Soup is asked to use in Step 7.
- The deque class of the collections module stores data in a double-ended queue.
- The urlsplit function in the urllib.parse module splits a URL into five parts.
- The emails can be saved in a DataFrame for future processing using the pandas module.
- You can use the tld library to acquire relevant emails.

pip install requests

pip install bs4

pip install lxml

pip install pandas

pip install tld

Step 2: Import Libraries.

Import the libraries as shown below:

import re

import requests

from bs4 import BeautifulSoup

from collections import deque

from urllib.parse import urlsplit

import pandas as pd

from tld import get_fld

Step 3: Create User Input.

Ask the user to enter the desired website for extracting emails with the input() function and store them in the variable user_url:

user_url = input("Enter the website url to extract emails: ")

if not user_url.startswith(("http://", "https://")):
    user_url = "https://" + user_url

Step 4: Set up variables.

Before we start writing the code, let’s define some variables.

Create two variables using the command below to store the URLs of scraped and un-scraped websites:

unscraped_url = deque([user_url])

scraped_url = set()

You can save the URLs of websites that are not scraped using the deque container. Additionally, the URLs of the sites that were scraped are saved in a set data format.

As seen below, the variable list_emails contains the retrieved emails:

list_emails = set()

Utilizing a set data type is primarily intended to eliminate duplicate emails and keep just unique emails.

Let us proceed to the next step of our main program to extract email from a website.

Step 5: Adding Urls for Content Extraction.

Web page URLs are moved from the variable unscraped_url to scraped_url as the program begins extracting content from the user-entered URL. The code in Steps 6 through 8 belongs inside this while loop, indented one level.

while len(unscraped_url):
    url = unscraped_url.popleft()
    scraped_url.add(url)

The popleft() method removes the web page URLs from the left side of the deque container and saves them in the url variable.

Then the url is stored in scraped_url using the add() method.
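The queue-and-set bookkeeping from Steps 4 and 5 can be tried without any network access. The sketch below is only an illustration, not the article's crawler: the fake_links dictionary and the crawl_order function are hypothetical stand-ins for real pages and their links.

```python
from collections import deque

# Hypothetical link graph standing in for real pages (assumption for illustration).
fake_links = {
    "https://example.com/": ["https://example.com/about", "https://example.com/contact"],
    "https://example.com/about": ["https://example.com/", "https://example.com/contact"],
    "https://example.com/contact": [],
}

def crawl_order(start_url, links):
    """Return the order in which URLs are visited, each exactly once."""
    unscraped_url = deque([start_url])   # URLs waiting to be processed
    scraped_url = set()                  # URLs already processed
    order = []
    while unscraped_url:
        url = unscraped_url.popleft()    # take the oldest queued URL
        scraped_url.add(url)
        order.append(url)
        for weblink in links.get(url, []):
            # queue a link only if it is neither waiting nor already done
            if weblink not in unscraped_url and weblink not in scraped_url:
                unscraped_url.append(weblink)
    return order

visit_order = crawl_order("https://example.com/", fake_links)
print(visit_order)
```

Because every URL is checked against both containers before it is queued, the loop ends once all reachable pages have been visited, even when pages link back to each other.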

Step 6: Splitting of URLs and merging them with base URL.

The website contains relative links that you cannot access directly.

Therefore, we must merge the relative links with the base URL. We need the urlsplit() function to do this.

parts = urlsplit(url)

Create a parts variable to segment the URL as shown below.

SplitResult(scheme='https', netloc='www.scrapingbee.com', path='/', query='', fragment='')

As the example above shows, the URL https://www.scrapingbee.com/ is divided into scheme, netloc, path, and other elements.

The netloc attribute of the split result contains the website’s host name. Continue reading to learn how this helps our program.

base_url = "{0.scheme}://{0.netloc}".format(parts)

Next, we create the base URL by merging the scheme and netloc.

The base URL is the main website’s URL, i.e., what you type into the browser’s address bar to reach the home page.

If the user enters the URL of a subpage rather than the home page, we trim it back to the part that ends with the last slash. We can accomplish this with the following code:

if '/' in parts.path:
    part = url.rfind("/")
    path = url[0:part + 1]
else:
    path = url

Let us understand how each line of the above command works.

Suppose the user enters the following URL:

This URL points to a subpage, and the code above trims it back to https://www.scrapingbee.com/. Let’s see how it works.

If the condition finds a “/” in the path of the URL, the rfind() method locates the last slash “/” in the URL. Here it is at index 27.

The next line keeps the characters from index 0 up to, but not including, index 27 + 1 = 28, i.e., https://www.scrapingbee.com/.

In the else branch, the URL has no path at all, so the path variable is simply the URL itself.
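To check the index arithmetic, the two computations from this step can be wrapped in a small function and run on sample URLs. This is a sketch: the helper name base_and_path and the subpage /blog/web-scraping-101 are made up for illustration.

```python
from urllib.parse import urlsplit

def base_and_path(url):
    """Reproduce the base_url and path computations from this step."""
    parts = urlsplit(url)
    base_url = "{0.scheme}://{0.netloc}".format(parts)
    if "/" in parts.path:
        # keep everything up to and including the last slash
        path = url[: url.rfind("/") + 1]
    else:
        path = url
    return base_url, path

# Hypothetical subpage URL (assumption for illustration).
base_url, path = base_and_path("https://www.scrapingbee.com/blog/web-scraping-101")
print(base_url)
print(path)
```

For the subpage, path ends at the last slash (the /blog/ directory), while base_url always stops after the host name.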

The following command prints the URLs for which the program is scraping.

print("Searching for Emails in %s" % url)

Step 7: Extracting Emails from the URLs.

An HTTP GET request fetches the page at the current URL.

response = requests.get(url)

Then, extract all email addresses from the response using a regular expression, and add them to the list_emails set.

new_emails = re.findall(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b", response.text, re.I)

list_emails.update(new_emails)

The regular expression is built to match the syntax of an email address. re.findall() applies it to the page content in response.text and returns every match in new_emails. The re.I flag makes the match case-insensitive. Finally, the list_emails set is updated with the new emails.
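The pattern can be tried on a small piece of text before pointing it at a live page. In the sketch below, the sample HTML and the addresses are invented, and lowercasing the matches before storing them is an extra step that the article's code does not perform.

```python
import re

# Same pattern as above; the character classes already cover both cases,
# so the re.I flag is not needed here.
EMAIL_PATTERN = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")

# Hypothetical page content (assumption for illustration).
sample_html = """
<p>Contact: Support@Example.com or sales@example.co.uk.</p>
<a href="mailto:support@example.com">Mail us</a>
"""

found = EMAIL_PATTERN.findall(sample_html)

# Optional extra step: normalise case so the set treats
# Support@Example.com and support@example.com as one address.
unique_emails = {email.lower() for email in found}
print(found)
print(unique_emails)
```

Without the lowercasing step, a set would keep Support@Example.com and support@example.com as two separate entries.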

The next step is to find all of the links on the page and queue them, so that the emails on those pages can be retrieved as well. The Beautiful Soup module handles this.

soup = BeautifulSoup(response.text, 'lxml')

The BeautifulSoup constructor parses the HTML document of the fetched page, as shown in the above command.

You can find out how many emails have been extracted with the following command.

print("Email Extracted: " + str(len(list_emails)))

The URLs related to the website can be found with “a href” anchor tags.

for tag in soup.find_all("a"):
    if "href" in tag.attrs:
        weblink = tag.attrs["href"]
    else:
        weblink = ""

Beautiful Soup finds every anchor tag “a” on the page.

If a tag has an href attribute, its URL is stored in the weblink variable; otherwise, weblink is an empty string.
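The same href-or-empty-string logic can be checked offline. The sketch below deliberately swaps Beautiful Soup for the standard library's html.parser so that it runs without extra installs; the HrefCollector class and the sample markup are hypothetical.

```python
from html.parser import HTMLParser

class HrefCollector(HTMLParser):
    """Collect the href of every <a> tag; a missing href becomes ''."""

    def __init__(self):
        super().__init__()
        self.weblinks = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs
            self.weblinks.append(dict(attrs).get("href") or "")

# Hypothetical markup (assumption for illustration).
collector = HrefCollector()
collector.feed(
    '<a href="/#pricing">Pricing</a>'
    '<a name="top">Top</a>'
    '<a href="/documentation">Documentation</a>'
)
weblinks = collector.weblinks
print(weblinks)
```

The anchor without an href shows up as an empty string, which is the case the else branch above handles.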

Still inside the for loop, the link is turned into a full URL:

if weblink.startswith('/'):
    weblink = base_url + weblink
elif not weblink.startswith('https'):
    weblink = path + weblink

Some href values contain only a path that begins with “/”, followed by the page name, with no base URL.

For instance, you can see the following links on the ScrapingBee website:

<a href="/#pricing" class="block hover:underline">Pricing</a>
<a href="/#faq" class="block hover:underline">FAQ</a>
<a href="/documentation" class="text-white hover:underline">Documentation</a>

Thus, the above command combines the extracted href link and the base URL.

For example, in the case of pricing, the weblink variable is as follows:

weblink = "https://www.scrapingbee.com/#pricing"

In some cases, href doesn’t start with either “/” or “https”; in that case, the command combines the path with that link.

For example, an href may look like this:

<a href="mailto:support@scrapingbee.com?subject=Enterprise plan&body=Hi there, I'd like to discuss the Enterprise plan." class="btn btn-sm btn-black-o w-full mt-13">1-click quote</a>
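Note that prefixing path to a mailto: link does not produce a page worth crawling. As an alternative to the manual string concatenation, the standard library's urljoin() can resolve relative links, and the scheme can be checked afterwards. This is a sketch of that alternative, not the article's code; resolve_link is a hypothetical helper and the page URL is an example.

```python
from urllib.parse import urljoin, urlsplit

def resolve_link(page_url, href):
    """Return an absolute http(s) URL for href, or None if it is not a web link."""
    absolute = urljoin(page_url, href)
    if urlsplit(absolute).scheme not in ("http", "https"):
        return None  # e.g. mailto: links are not pages to crawl
    return absolute

# Example page URL (assumption for illustration).
page_url = "https://www.scrapingbee.com/blog/"

print(resolve_link(page_url, "/#pricing"))
print(resolve_link(page_url, "documentation"))
print(resolve_link(page_url, "mailto:support@scrapingbee.com"))
```

With this approach, the weblink would only be queued when resolve_link() returns a URL rather than None.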

Now let’s complete the code with the following command:

if weblink not in unscraped_url and weblink not in scraped_url:
    unscraped_url.append(weblink)

print(list_emails)

The if statement appends every URL that has not been seen yet to unscraped_url. To view the results, print list_emails.

Run the program.

What if the program doesn’t work?

Are you getting a MissingSchema, ConnectionError, or InvalidURL exception?

Some websites cannot be accessed for one reason or another.

Don’t worry! Let’s see how to handle these errors.

Use a try/except statement to skip the failing URLs, as shown below:

try:
    response = requests.get(url)
except (requests.exceptions.MissingSchema, requests.exceptions.ConnectionError, requests.exceptions.InvalidURL):
    continue

This block replaces the plain response = requests.get(url) line from Step 7, so it sits directly above the new_emails line.

Run the program now.

Did the program work?

Does it keep running for several hours without finishing?

The program searches and extracts all the URLs from the given website. It also follows links to other domains. For example, the ScrapingBee website links to https://seekwell.io/, https://codesubmit.io/, and more.

A well-built website can have up to 100 links on a single page, so the program will take several hours to follow them all.

Sorry about it. You have to face this issue to get your target emails.

Bye Bye, the article ends here……..

No, I am just joking!

Fret Not! I will give you the best solution in the next step.

Step 8: Fix the code problems.

Here is the solution code for you. It replaces the final if block from Step 7:

if base_url in weblink:  # code 1
    if ("contact" in weblink or "Contact" in weblink or "About" in weblink or "about" in weblink or 'CONTACT' in weblink or 'ABOUT' in weblink or 'contact-us' in weblink):  # code 2
        if weblink not in unscraped_url and weblink not in scraped_url:
            unscraped_url.append(weblink)

First, code 1 keeps only links that contain the base URL, which prevents the program from scraping other domains linked from the website.

Since the majority of emails are listed on the “contact us” and “about” pages, only links to those pages are followed (refer to code 2). Other pages are not considered.

Finally, links that have not been seen yet are added to the unscraped_url variable.
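The three nested conditions can also be written as one small predicate, which makes them easy to test in isolation. The sketch below is one possible rewrite: should_queue and KEYWORDS are hypothetical names, the URLs are examples, and lowercasing the link replaces the separate checks for “Contact”, “CONTACT”, and so on.

```python
KEYWORDS = ("contact", "about")  # page names that usually list emails

def should_queue(weblink, base_url, unscraped_url, scraped_url):
    """Return True if weblink is a new contact/about page on the same site."""
    if base_url not in weblink:                                   # code 1
        return False
    if not any(word in weblink.lower() for word in KEYWORDS):    # code 2
        return False
    return weblink not in unscraped_url and weblink not in scraped_url

base_url = "https://www.scrapingbee.com"
seen = {"https://www.scrapingbee.com/about"}

print(should_queue("https://www.scrapingbee.com/Contact-Us", base_url, [], seen))
print(should_queue("https://seekwell.io/contact", base_url, [], seen))
print(should_queue("https://www.scrapingbee.com/about", base_url, [], seen))
```

A link passes only when all three checks hold, so off-site links, unrelated pages, and already-seen pages are all rejected.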

Step 9: Exporting the Email Address to CSV file.

Finally, we can save the email addresses in a CSV file (email2.csv) using a pandas DataFrame.

url_name = "{0.netloc}".format(parts)

col = "List of Emails " + url_name

df = pd.DataFrame(list_emails, columns=[col])

s = get_fld(base_url)

df = df[df[col].str.contains(s) == True]

df.to_csv('email2.csv', index=False)

We use get_fld to keep only the emails that belong to the first-level domain of the base URL. The s variable contains that domain; for example, for https://www.scrapingbee.com it is scrapingbee.com.

Only emails containing the website’s first-level domain name stay in the data frame. Emails from other domains are dropped. Finally, the data frame writes the emails to a CSV file.
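One caveat: str.contains() performs a substring match (and treats its argument as a regular expression by default), so an address such as someone@notscrapingbee.com would also pass the filter. If a stricter check is wanted, the host part of each address can be compared against the domain directly. The following is a sketch in plain Python; belongs_to_domain is a hypothetical helper and the addresses are invented.

```python
def belongs_to_domain(email, domain):
    """True if the email's host is the domain itself or one of its subdomains."""
    host = email.rsplit("@", 1)[-1].lower()
    return host == domain or host.endswith("." + domain)

# Invented addresses (assumption for illustration).
emails = {
    "jobs@scrapingbee.com",
    "info@mail.scrapingbee.com",
    "someone@notscrapingbee.com",
    "x@scrapingbee.com.evil.org",
}

kept = sorted(e for e in emails if belongs_to_domain(e, "scrapingbee.com"))
print(kept)
```

Only the first two addresses survive; the look-alike domains are dropped.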

As previously stated, a single page can contain up to 100 links.

Because a normal website has more than 30 hyperlinks on each page, the program will still take some time to finish. If you believe it has extracted enough emails, you can stop it manually with Ctrl+C. The try/except KeyboardInterrupt block below saves the results and then raises SystemExit:

try:
    while len(unscraped_url):
        …
                if base_url in weblink:
                    if ("contact" in weblink or "Contact" in weblink or "About" in weblink or "about" in weblink or 'CONTACT' in weblink or 'ABOUT' in weblink or 'contact-us' in weblink):
                        if weblink not in unscraped_url and weblink not in scraped_url:
                            unscraped_url.append(weblink)

    url_name = "{0.netloc}".format(parts)
    col = "List of Emails " + url_name
    df = pd.DataFrame(list_emails, columns=[col])
    s = get_fld(base_url)
    df = df[df[col].str.contains(s) == True]
    df.to_csv('email2.csv', index=False)

except KeyboardInterrupt:
    url_name = "{0.netloc}".format(parts)
    col = "List of Emails " + url_name
    df = pd.DataFrame(list_emails, columns=[col])
    s = get_fld(base_url)
    df = df[df[col].str.contains(s) == True]
    df.to_csv('email2.csv', index=False)
    print("Program terminated manually!")
    raise SystemExit
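The export code appears twice above, once for the normal exit and once for the manual interrupt. One way to avoid the duplication is a finally clause, which runs in both cases. The sketch below shows the idea with the standard csv module instead of pandas and with simulated pages; save_emails, run, and interrupted_pages are hypothetical names, and unlike the article's code it does not raise SystemExit afterwards.

```python
import csv
import io

def save_emails(list_emails, out_file):
    """Write the collected emails as a one-column CSV."""
    writer = csv.writer(out_file)
    writer.writerow(["List of Emails"])
    for email in sorted(list_emails):
        writer.writerow([email])

def run(pages, out_file):
    """Collect emails page by page; always save, even after Ctrl+C."""
    list_emails = set()
    try:
        for emails_on_page in pages:
            list_emails.update(emails_on_page)
    except KeyboardInterrupt:
        print("Program terminated manually!")
    finally:
        save_emails(list_emails, out_file)  # runs on both exit paths
    return list_emails

def interrupted_pages():
    """Simulate one scraped page followed by the user pressing Ctrl+C."""
    yield {"a@example.com"}
    raise KeyboardInterrupt

buffer = io.StringIO()  # stands in for the CSV file on disk
collected = run(interrupted_pages(), buffer)
print(buffer.getvalue())
```

The email found before the simulated interrupt still ends up in the output, which is the behavior the duplicated export code above is meant to guarantee.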

Run the program and enjoy it…

Let’s see what our fantastic email scraper application produced. The website I have entered is www.abbott.com.

Output:

Method 2 Indirect Email Extraction

You will learn the steps to extract email addresses from Google.com using the second method.

Step 1: Install Libraries.

Using the pip command, install the third-party Python libraries listed below. The re and time modules are part of Python’s standard library and need no installation.

- bs4 is Beautiful Soup, used for parsing the Google result pages.
- The pandas module can save emails in a DataFrame for future processing.
- You can use regular expressions (re) to match the email address format.
- The requests library sends HTTP requests.
- You can use the tld library to acquire relevant emails.
- The time library delays the scraping of pages.

pip install bs4

pip install pandas

pip install requests

pip install tld

Step 2: Import Libraries.

Import the libraries.

from bs4 import BeautifulSoup

import pandas as pd

import re

import requests

from tld import get_fld

import time

Step 3: Constructing Search Query.

The search query is written in the format “@websitename.com”.

Create an input for the user to enter the name of the website.

user_keyword = input("Enter the Website Name: ")

user_keyword = '"@' + user_keyword + '"'

As the user_keyword line above shows, the search query has the form "@websitename.com", wrapped in opening and closing double quotes so that Google searches for the exact phrase.

Step 4: Define Variables.

Before moving on to the heart of the program, let’s first set up the variables.

page = 0

list_email = set()

You can move through multiple Google search result pages using the page variable. The list_email set holds the extracted emails.

Step 5: Requesting Google Page.

In this step, you will learn how to create a Google URL link using a user keyword term and request the same.

The main part of the code starts as follows:

while page <= 100:
    print("Searching Emails in page No " + str(page))
    time.sleep(20.00)
    google = "https://www.google.com/search?q=" + user_keyword + "&ei=dUoTY-i9L_2Cxc8P5aSU8AI&start=" + str(page)
    response = requests.get(google)
    print(response)

Let’s examine what each line of code does.

- The while loop lets the email extraction bot retrieve emails up to a specific number of result pages, in this case the pages with start offsets 0 through 100, i.e., 11 pages.
- The code prints the offset of the Google page being extracted. The first page is represented by 0, the second by 10, the third by 20, and so on.
- To avoid having our IP address blocked by Google, the program pauses for 20 seconds before each request.

Before creating a google variable, let us learn more about the google search URL.

Suppose you search the keyword “Germany” on google.com. Then the Google search URL will be as follows

If you click the second page of the Google search result, then the link will be as follows:

How does that link work?

- The user keyword is inserted after “q=”, and the page offset is added after “start=”, as shown above in the google variable.
- The program then requests the Google page and prints the response to test whether it is working. A 200 response code means the page was accessed successfully. A 429 means that you have hit your request limit and must wait two hours before making any more requests.
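The URL construction can be checked without sending any request. The sketch below builds the URLs with urlencode(), which also percent-encodes the quotes and the @ sign. It leaves out the ei parameter from the article's URL on the assumption that it is optional, and google_search_url is a hypothetical helper.

```python
from urllib.parse import urlencode

def google_search_url(user_keyword, page):
    """Build a search URL with the keyword after q= and the offset after start=."""
    query = urlencode({"q": user_keyword, "start": page})
    return "https://www.google.com/search?" + query

# Offsets 0, 10, ..., 100: the same values the while loop steps through.
urls = [google_search_url('"@scrapingbee.com"', page) for page in range(0, 101, 10)]

print(len(urls))
print(urls[0])
print(urls[1])
```

The list has one entry per result page that the loop would request.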

Step 6: Extracting Email Address.

In this step, you will learn how to extract the email address from the google search result contents.

    soup = BeautifulSoup(response.text, 'html.parser')

    new_emails = re.findall(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b", soup.text, re.I)

    list_email.update(new_emails)

    page = page + 10

These lines continue the body of the while loop from Step 5. Beautiful Soup parses the result page and extracts its text content.

The regex findall() function then pulls the email addresses out of that text, and the list_email set is updated with the new emails. Finally, page is increased by 10 to move on to the next result page.

n = len(user_keyword)-1

base_url = "https://www." + user_keyword[2:n]

col = "List of Emails " + user_keyword[2:n]

df = pd.DataFrame(list_email, columns=[col])

s = get_fld(base_url)

df = df[df[col].str.contains(s) == True]

df.to_csv('email3.csv', index=False)

Finally, the lines above, which run after the while loop has finished, save the target emails to a CSV file. The slice user_keyword[2:n] skips the leading quote and @ sign as well as the trailing quote, leaving only the domain name.
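The slicing is easy to verify on a sample keyword. In this sketch the domain is an example, and the strip-based variant is just an alternative way to get the same result, not part of the article's code.

```python
# Build the keyword the same way as in Step 3, for an example domain.
user_keyword = '"@' + "scrapingbee.com" + '"'

n = len(user_keyword) - 1
domain = user_keyword[2:n]          # drop the leading "@ and the trailing "

# Alternative without index arithmetic (assumption: same intent).
alternative = user_keyword.strip('"').lstrip("@")

print(domain)
print(alternative)
```

Both expressions yield the bare domain name that get_fld() and the column title need.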

Run the program and see the output.

Method 1 Vs. Method 2

Can we determine which approach is more effective for building an email list: Method 1 Direct Email Extraction or Method 2 Indirect Email Extraction? Both email lists below were generated for the website abbott.com.

Let’s compare the two email lists extracted using Methods 1 and 2.

- From Method 1, the extractor has retrieved 60 emails.

- From Method 2, the extractor has retrieved 19 emails.

- 17 of the emails from Method 2 are not included in the Method 1 list.

- These emails are employee-specific rather than company-wide. Additionally, there are more employee emails in Method 1.

Thus, we cannot recommend one method over the other. Each technique finds emails that the other misses, so using both will grow your email list.
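A comparison like the one above can be done with Python set operations once both lists are loaded. The sketch below uses small invented sets rather than the actual results.

```python
# Invented sample results (assumption for illustration, not the real output).
method1 = {"a@example.com", "b@example.com", "c@example.com"}
method2 = {"c@example.com", "d@example.com"}

only_in_method2 = method2 - method1   # found only by the Google search
in_both = method1 & method2           # found by both methods
combined = method1 | method2          # merged, de-duplicated list

print(sorted(only_in_method2))
print(sorted(in_both))
print(len(combined))
```

The union is the merged email list, and the difference shows what the second method contributes on top of the first.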

Summary

Building an email list is crucial for businesses and freelancers alike to increase sales and leads.

This article offers instructions on using Python to retrieve email addresses from websites.

It presents two methods for obtaining email addresses.

The first approach extracts emails directly from any website, and the second extracts email addresses using Google.com.

Finally, the two techniques are compared in order to provide a recommendation.

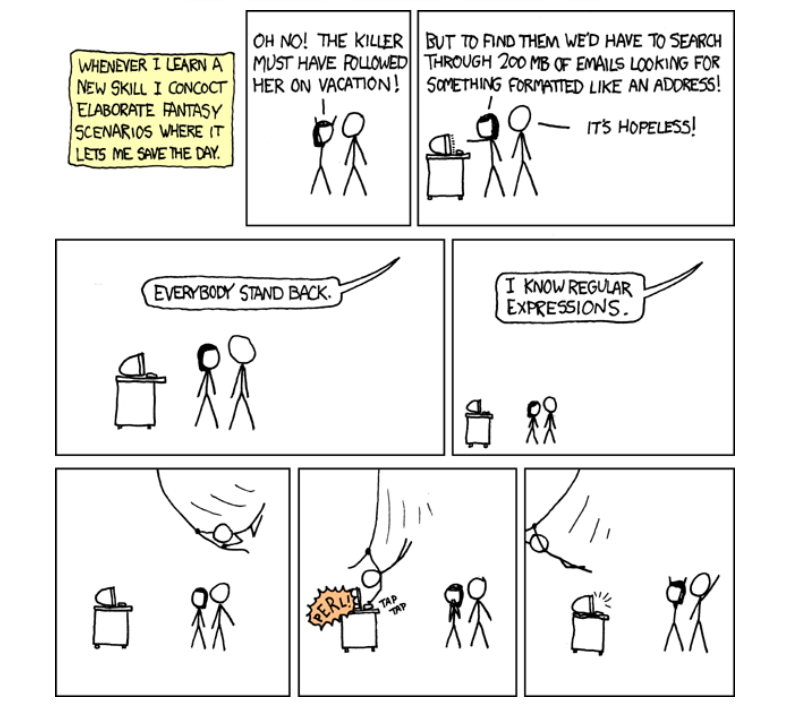

Regex Humor
